Artificial Intelligence (AI) is revolutionising industries, from healthcare to finance, bringing automation, prediction, and personalisation to unprecedented levels.

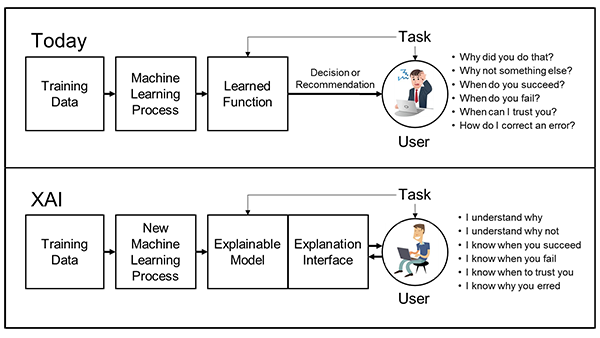

However, the widespread adoption of AI systems is often impeded by their 'black box' nature: it may not be possible to truly understand how a trained AI program arrives at its decisions or predictions.

Enter Explainable AI, the practice of making AI transparent with easily interpretable results.

In this post, I will delve into the importance of Explainable AI, its implementation, and why AI should not be a cryptic oracle but a transparent tool.

Why Explainable AI is Important

Explainable AI is important for many reasons, including the following.

Trust

To adopt and rely on AI fully, individuals and enterprises need to trust it. Blind faith has no place in today's data-driven world. If a system recommends a business strategy, prescribes a drug, or even suggests a movie, users want to know why.

Collaboration

As AI becomes a collaborator in the workplace, people need to understand its reasoning just as they would a colleague's. An architect designing a building with the assistance of AI needs to know why the AI suggests certain structures or materials.

Ethical Decisions

Without understanding AI's decision-making, how can one judge its ethical basis? AI, like humans, needs to be accountable for its choices.

Regulatory Compliance

In many sectors, especially those impacting human lives like healthcare or transportation, there's a regulatory need to explain decisions made by AI. Without transparency, these systems can't be used or would face heavy restrictions.

Continual Improvement

When we understand the 'why' behind AI's choices, we can refine and improve it. Without this, we're shooting in the dark.

Making AI Explainable

How does one make AI Explainable? Merely bolting on an explanation at the end won't do. This approach is akin to baking a cake and then trying to change its ingredients. AI systems need explainability baked in from the start.

Moreover, "explainability" isn't a one-size-fits-all concept. A crucial question is, "Explainable to whom?" The intricate data paths that make sense to an AI developer could be Greek to a business executive. A layperson doesn't need or want to understand the nitty-gritty of neural networks or decision trees. They need clear, concise explanations that align with their understanding.

In essence, explainability hinges on human cognition. Each person perceives and understands differently, coloured by their experiences, expertise, and context. Hence, AI Explainability should be adjustable, catering to the unique cognitive palette of every individual.

How to Implement Explainable AI?

There are many ways to implement Explainable AI; some common ones are listed below.

Feedback Loops

Incorporate feedback mechanisms where users can question AI decisions and get explanations. This iterative process not only aids understanding but also refines the model over time.

Choose Interpretable Models

Start with models that are inherently interpretable. For instance, linear regression, logistic regression, and decision trees are more easily understood than complex models like deep neural networks or ensemble methods.
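To illustrate why a linear model counts as interpretable, here is a minimal sketch of a hand-sized scoring model. The feature names, weights, and bias are hypothetical values I made up for illustration, not taken from any real system. Because the score is just a weighted sum, each feature's contribution can be read off directly.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for a toy credit-scoring model (illustrative only).
WEIGHTS: Dict[str, float] = {
    "income_k": 0.5,
    "missed_payments": -10.0,
    "years_employed": 2.0,
}
BIAS = 10.0


def score(applicant: Dict[str, float]) -> float:
    """Linear model: the bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant: Dict[str, float]) -> List[Tuple[str, float]]:
    """Per-feature contributions to the score, largest absolute effect first."""
    contributions = [(name, WEIGHTS[name] * applicant[name]) for name in WEIGHTS]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


applicant = {"income_k": 40.0, "missed_payments": 3.0, "years_employed": 5.0}

if __name__ == "__main__":
    print("score:", score(applicant))
    for name, contribution in explain(applicant):
        print(f"{name}: {contribution:+.1f}")
```

The contributions and the bias add up to the score exactly, so the explanation is the model itself rather than an approximation of it.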

Post-hoc Analysis

For models that are already developed, post-hoc methods can be employed. These involve analysing the model after it's been trained, using tools and techniques to break down its decision-making processes.
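One widely used post-hoc technique is permutation importance: scramble one input column at a time and see how often the model's predictions change. The sketch below is a simplified version under stated assumptions: `black_box` is a hypothetical stand-in for an opaque trained model, the data is invented, and the column is rotated by one position instead of being randomly shuffled so that the result is reproducible.

```python
from typing import Callable, Dict, List

Row = Dict[str, float]


def black_box(row: Row) -> int:
    """Hypothetical stand-in for an opaque trained model."""
    return 1 if row["income"] > 50 else 0


def permutation_importance(
    model: Callable[[Row], int], rows: List[Row], features: List[str]
) -> Dict[str, float]:
    """Fraction of predictions that change when one feature column is permuted."""
    baseline = [model(row) for row in rows]
    scores: Dict[str, float] = {}
    for feature in features:
        column = [row[feature] for row in rows]
        # Deterministic permutation: rotate the column by one position.
        rotated = column[-1:] + column[:-1]
        changed = 0
        for row, new_value, original in zip(rows, rotated, baseline):
            perturbed = dict(row)  # copy so the input data is left untouched
            perturbed[feature] = new_value
            if model(perturbed) != original:
                changed += 1
        scores[feature] = changed / len(rows)
    return scores


ROWS: List[Row] = [
    {"income": 30, "shoe_size": 42},
    {"income": 80, "shoe_size": 38},
    {"income": 40, "shoe_size": 45},
    {"income": 90, "shoe_size": 40},
]
IMPORTANCE = permutation_importance(black_box, ROWS, ["income", "shoe_size"])

if __name__ == "__main__":
    print(IMPORTANCE)
```

A feature the model ignores scores zero, while a feature it relies on scores high. In practice you would use random shuffles, repeat them several times, and average the results.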

Training Data Transparency

Ensure that the data on which AI is trained is clean, unbiased, and representative. Transparency in data leads to transparency in models.
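As a small, partial example of checking representativeness, the sketch below measures each group's share of a training set and flags groups that fall under a threshold. The `region` field, the records, and the 20% threshold are all hypothetical choices of mine, and a check like this says nothing about label quality or other forms of bias.

```python
from collections import Counter
from typing import Dict, List


def group_shares(records: List[dict], field: str) -> Dict[str, float]:
    """Share of the records that falls into each value of `field`."""
    counts = Counter(record[field] for record in records)
    total = len(records)
    return {group: count / total for group, count in counts.items()}


def under_represented(records: List[dict], field: str, min_share: float) -> List[str]:
    """Groups whose share of the data is below `min_share`, in sorted order."""
    shares = group_shares(records, field)
    return sorted(group for group, share in shares.items() if share < min_share)


# Invented training records: 6 north, 3 south, 1 east.
TRAINING = (
    [{"region": "north"}] * 6 + [{"region": "south"}] * 3 + [{"region": "east"}] * 1
)

if __name__ == "__main__":
    print(group_shares(TRAINING, "region"))
    print(under_represented(TRAINING, "region", min_share=0.2))
```

Publishing a summary like this alongside a model gives readers a concrete starting point for questioning what the model has and has not seen.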

Tailored Explanations

Recognise that interpretability is subjective. What's clear to a data scientist might be opaque to someone else. Tailor explanations to the audience, presenting them in a manner they'll understand.
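Here is a minimal sketch of what tailoring could look like in code: the same set of feature contributions is rendered as raw numbers for a data scientist and as one plain sentence for everyone else. The audience label, feature names, and contribution values are hypothetical.

```python
from typing import Dict


def explain_for(audience: str, contributions: Dict[str, float]) -> str:
    """Render the same feature contributions differently per audience."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "data_scientist":
        # Technical readers get every signed contribution.
        return "; ".join(f"{name}={value:+.2f}" for name, value in ranked)
    # Everyone else gets the single biggest factor in plain words.
    top_name, top_value = ranked[0]
    label = top_name.replace("_", " ")
    direction = "raised" if top_value > 0 else "lowered"
    return f"The biggest factor was {label}, which {direction} the score."


# Invented contributions for one decision.
CONTRIBUTIONS = {"income_k": 20.0, "missed_payments": -30.0, "years_employed": 10.0}

if __name__ == "__main__":
    print(explain_for("data_scientist", CONTRIBUTIONS))
    print(explain_for("customer", CONTRIBUTIONS))
```

Both outputs come from the same underlying numbers, so the explanation changes in form without changing in substance.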

AI Logging

Automatically log the AI's input and output before it's mixed with human content and traceability becomes impossible. With this level of logging, there is a clear audit trail to help decipher how a published output came to be.
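A minimal sketch of such an audit trail, assuming one JSON record per line: the record fields, the `example-model` name, and the in-memory sink are my own illustrative choices, and a real system would write to durable, append-only storage.

```python
import hashlib
import io
import json
from datetime import datetime, timezone
from typing import TextIO


def log_ai_exchange(sink: TextIO, model: str, prompt: str, output: str) -> dict:
    """Append one prompt/output pair to the audit log as a JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "prompt": prompt,
        "output": output,
        # A hash lets you later prove the logged output was not altered.
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
    }
    sink.write(json.dumps(record) + "\n")
    return record


def was_generated(log_text: str, passage: str) -> bool:
    """True if any logged AI output contains the passage verbatim."""
    lines = [line for line in log_text.splitlines() if line.strip()]
    return any(passage in json.loads(line)["output"] for line in lines)


# In-memory sink for the example; swap in an open file in practice.
audit_log = io.StringIO()
log_ai_exchange(audit_log, "example-model", "Summarise Q3 sales.", "Q3 sales rose 4% on Q2.")

if __name__ == "__main__":
    print(audit_log.getvalue())
    print(was_generated(audit_log.getvalue(), "rose 4%"))
```

The important design choice is to log at the moment of generation, before any human edits, so that a published passage can later be traced back to the exchange that produced it.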

Cite Sources

Instructing the model to cite its source material when it makes statements makes those statements much more likely to be grounded. For a fabrication to slip through, the model must then make two errors: the first is the fabricated statement, and the second is the bad citation that supports it.
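The sketch below shows one way this might be wired up: a prompt that asks for citations, plus two simple checks on the answer. The `[S1]`-style citation format, the source texts, and the sample answer are hypothetical, and the checks only catch missing or unknown citations, not a citation that points to a real source but misrepresents it.

```python
import re
from typing import Dict, List


def build_prompt(question: str, sources: Dict[str, str]) -> str:
    """Build a prompt that lists the sources and asks for a citation per claim."""
    listing = "\n".join(f"[{sid}] {text}" for sid, text in sources.items())
    return (
        "Answer using only the sources below. "
        "After every claim, cite the source id in square brackets.\n\n"
        f"{listing}\n\nQuestion: {question}"
    )


def unknown_citations(answer: str, sources: Dict[str, str]) -> List[str]:
    """Cited ids that do not match any supplied source."""
    cited = re.findall(r"\[(\w+)\]", answer)
    return sorted({sid for sid in cited if sid not in sources})


def uncited_sentences(answer: str) -> List[str]:
    """Sentences that carry no citation at all."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    return [s for s in sentences if not re.search(r"\[\w+\]", s)]


SOURCES = {"S1": "Q3 revenue grew 4% over Q2.", "S2": "Headcount was flat."}
# Invented model answer with one good citation, one bad one, and one missing.
ANSWER = "Revenue grew 4% [S1]. Costs fell [S9]. Margins doubled."

if __name__ == "__main__":
    print(build_prompt("How did Q3 go?", SOURCES))
    print(unknown_citations(ANSWER, SOURCES))
    print(uncited_sentences(ANSWER))
```

Anything flagged by either check is a candidate for human review before the answer is used.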

Conclusion

As businesses and consumers increasingly intertwine their decision-making processes with AI, they must advocate for clarity and transparency, ensuring the AI output is explainable for everyone.

Explainable AI is not just a good-to-have feature; it's a necessity in fostering trust, compliance, and fairness.